AI has recently taken a giant technological leap, largely thanks to OpenAI and the public release of ChatGPT. With GPT-3 and, more recently, GPT-4, OpenAI has created utterly convincing human-like language models. At a basic level, ChatGPT can hold a conversation. At more advanced levels, it can write copy for marketers, develop contextual strategies for businesses, write, optimise and understand nearly all programming languages, generate images, videos and so much more.

OpenAI now offers APIs that let businesses build GPT-3 or (the slightly pricier!) GPT-4 into their own value propositions. Microsoft has already begun integrating GPT into Bing, and Salesforce has launched the, for better or worse, more uniquely named 'Einstein GPT'. But you've probably all heard this before. To shake things up, I want to look at the impact of these advanced language models on organisations in general and dive deep into their potential impact on culture and people.

A range of AI platforms utilising GPT

When it comes to business performance, AI has a clear role to play in teams. It can help gather data and then analyse it, quickly finding trends and patterns that help teams optimise and improve their performance. A plethora of tools have been built on top of GPT, and here are a few that stand out:

- Magical AI: At the time of writing, this tool uses GPT-3.5. Its purpose is to automate the small tasks that add up to a lot of time over your working day. It can respond to customer emails while conveying the personality of the sender, and it has use cases in sales, recruiting, customer support and healthcare. Users report saving hours in their working day by using it.

- Durable AI Website Builder: This tool can build a website in '30 seconds' for around $12 a month, a fraction of what a traditional web developer would charge. Over 1.3 million websites have been built with it, which makes its value clear. Over time, it could change how we think about website creation and save businesses a great deal of time.

- Publer: This platform uses AI to help you scale your social media activity. It assists with brainstorming, tailors your content to specific social media platforms and can even generate content in your organisation's tone.

The list could go on forever, with quite literally hundreds of platforms each looking to solve a different problem. It is abundantly clear how AI can support teams across an organisation. The real challenge is striking the balance of when these tools are appropriate and to what extent.

AI supporting high-performance teams

Integrating these platforms into an employee's standard workflow is a challenge in itself. The technical integration can be complex, time-consuming and expensive, depending on your organisation's existing systems and processes. Data quality is also a factor. The data an AI relies on affects the accuracy of its output, and inaccurate output could lead to huge mistakes if the AI is used in workflows throughout the organisation.

On the people side, the implementation of these tools could be met with resistance, because many employees may be unfamiliar with them given how new they are. Moving forward, it will be crucial for organisations to be transparent when implementing these technologies. This helps maintain a high level of trust and keeps employees open to adopting these platforms. Finally, there is the most obvious drawback, and arguably one of the most talked about in the media: AI's privacy issues.

AI's reliance on data is both its strength and its weakness. AI image generators have produced images that reproduce copyrighted material or depict people who haven't given consent, and this presents a huge problem. Nor is the issue limited to image generation: text-based platforms could also output sensitive information they have learned from other users. I believe this is going to be one of the biggest decelerators for AI, as evidenced by the recent open letter signed by Elon Musk, Steve Wozniak and Max Tegmark, to name a few.

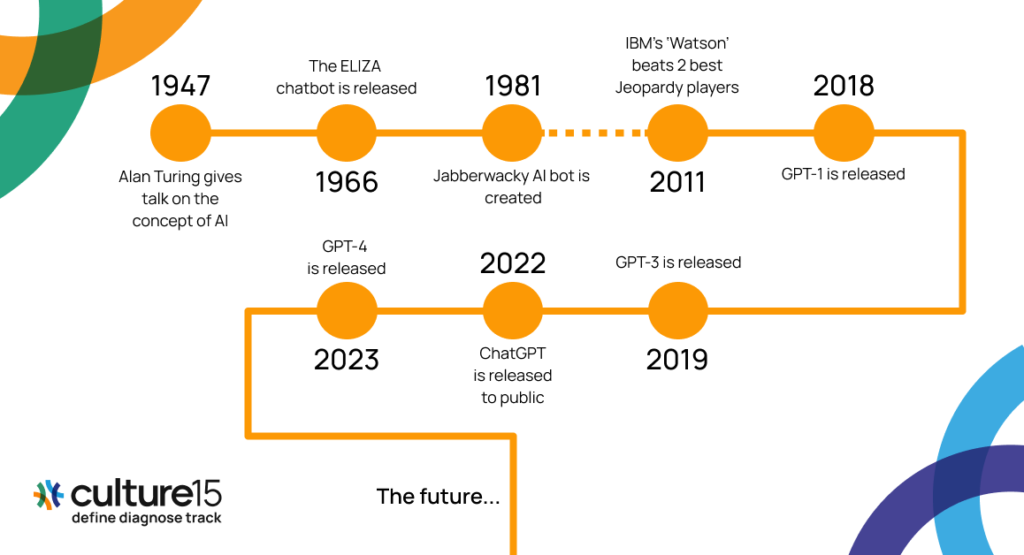

A brief chronology of AI

The threats of AI

I've spoken quite highly of AI and its benefits so far, but it is only fair to also discuss its drawbacks for organisations, their culture and their people. As the use of AI in everyday operations increases, so do the risks it poses. Some of these threats include:

- Job displacement: this is often the most talked-about threat, and many industries are exposed to it. The most prevalent examples are content creators, programmers, data analysts, technical marketers and legal roles such as paralegals and legal assistants. These roles all involve tasks that AI can now handle. This raises the question of whether the roles are truly necessary. It also raises the question of whether organisations should take the opportunity to upskill their employees to use AI in their workflows and greatly increase output.

- Bias: AI can perpetuate existing biases because it is trained on existing datasets that contain them. This could lead to inaccurate, unfair and/or discriminatory outputs, which is dangerous territory for organisations.

- Lack of transparency: AI systems can be difficult to understand, which calls into question how far they can be trusted when they help with decision-making. This could ultimately have a negative impact not only on an organisation's culture but also on its employees' morale.

- Loss of privacy: this point was mentioned in an earlier section but still needs to be highlighted. The data AI relies on could lead it to infringe copyright and to display sensitive information to other users. This poses huge risks to industries such as healthcare, which holds patient confidentiality as an extremely high priority and may therefore struggle to find a use case for AI.

Conclusion

To conclude, the advancements in AI technology, specifically the introduction of GPT-3, GPT-4, Bard and other AI tools, have greatly impacted organisations' cultures and people. AI can help teams gather and analyse data, optimise performance and automate tasks to save time. However, integrating these tools into an employee's workflow may be met with resistance, and data quality and privacy concerns must be taken into consideration. Additionally, the increasing use of AI in everyday operations poses threats such as job displacement, the perpetuation of existing biases, a lack of transparency and a loss of privacy. Organisations must navigate these challenges and balance the benefits and drawbacks of AI if they are to use these tools effectively and have a positive effect on their culture and people.

Ultimately, the case for AI depends on whether we are willing to accept and manage the risks that come with prioritising its advancement. Or do we mitigate the risks first and, as a result, hinder the advancement of AI technologies? I'm sure we all have our opinions, and they all have their merits. But I have already implemented AI in some of my workflows, and it has made many of my daily tasks more efficient.

This would be where the author of the article tries to bamboozle you with the revelation that ChatGPT wrote the whole blog. Unfortunately, that isn't the case… or is it? (It isn't.)

Culture15 is your complete toolkit for tracking culture change. CEOs and Exec Teams at world-leading organisations use Culture15 analytics to ensure success by aligning their culture with what they need to execute their strategy. If you’d like to find out how to define the culture and values you need, diagnose the culture you have and close the gap, talk to our team.